Where are all the vibe coding game devs at?

A tour through AI-assisted development in Unity, Roblox Studio, and Unreal helped me answer this question.

While the rest of software is having an AI-powered renaissance, it feels like the world of game development has been sluggish to embrace the sea change.

We’re now regularly inundated with headlines like “Mark Zuckerberg: 50% Of Coding Will Be Done By AI In 2026” or “Cursor writes almost 1 billion lines of accepted code a day.” For all the justifiable criticism that has been leveled at ‘vibe coding’, there’s no denying that AI-assisted development can significantly reduce development time when done correctly. And of course the tools and the models are only getting better.

With massive adoption and improvements seen elsewhere in the software industry, why does it feel like the gaming industry is slow on the uptake? You can find demos of devs ‘one-shotting’ games with a page of requirements, but these are mostly unserious prototypes. Examples of professional game studios using AI to massively speed up their workflows seem to be lacking, at least on the surface (Rockstar certainly seems not to have gotten the memo). It’s possible that this is all happening behind closed doors, but based on conversations with industry friends I believe this is unlikely to be the case at many studios. Probably the most successful example of a game being made primarily through AI-assisted development wasn’t even created by someone in the games industry.

fly.pieter.com, a game positioned as made entirely by/in Cursor

For developers who have spent any amount of time in a seemingly callous industry run less and less by people who actually make games, the reason for this might seem obvious: Why speed up the adoption of a technology that may ultimately supplant my way of making a living? Here then is where I’d like to acknowledge that pointing out a lack of AI-assisted development in the gaming community could come across as insensitive given the level of turmoil it has experienced over the past few years. But I don’t think sensitivity is ultimately a good reason to not talk about something that will eventually upend the entire industry.

While the fear of AI causing unemployment in the industry may be justified, it’s also the topic of a different post. I think the bigger reason for the lack of adoption might be that the AI tools for making games just aren’t that good yet. Building a prototype of a virtual world is a lot more complex than making a B2B SaaS landing page, and an AI code editor can only accelerate one pillar of the multidisciplinary game prototyping process.

To get a better sense of the state of AI-assisted game development, I built a game prototype in three of the most popular game engines.

A tour through AI integrations in modern game engines

Game development consists of a number of moving parts, like 3D model development, voice acting, sound design and so on. Assessing the state of AI-assisted development for each of these areas would take way more time than I wanted to allocate, so I had to be targeted in my approach. The piece of AI-assisted development I was most interested in is the one most crucial for prototyping: placing objects into a 2D or 3D world and creating the rules and logic for how the user and those objects interact with each other.

With the exception of a few niche genres, this is how game developers start experimenting to see whether a game has legs. So while there are plenty of opportunities (and tools being developed) for creating assets used in game development, I went into creating these prototypes with existing assets that can already be found for free online.

The game I tried to prototype

To test out these features, I decided to prototype a turn-based combat game using tanks. Think XCOM meets World of Tanks. Since I’m not really interested in asset creation here, I downloaded free existing assets from Unity’s tutorial website. I wanted my prototype to consist of a simple level for testing out my idea (a fuller overview of my game design doc can be found here). My goal then was to explore the existing AI tools available to see how fast I could make this prototype.

Unity

Open Source MCP

My first stab at developing a game in Unity started out with an open source Model Context Protocol (MCP) server. If you’re not familiar with them, MCP servers are effectively an API an LLM can use to read from and manipulate another tool in a structured way. In this setup you host the server locally on your machine, and it acts as the bridge between where you chat with your LLM (something like Cursor) and the thing you want that LLM to manipulate (something like Unity).
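To make the bridge idea concrete, here is a minimal sketch of what happens on the receiving end of a tool call. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method, but everything else here is an assumption for illustration: the `place_object` tool, the in-memory `scene` list, and the simplified result shape are hypothetical and not taken from the Unity MCP I used or from any MCP SDK.

```python
import json

# Hypothetical in-memory stand-in for the engine's scene.
scene = []

def place_object(asset: str, x: float, y: float, z: float) -> dict:
    """Hypothetical tool: add an asset to the scene at a position."""
    obj = {"asset": asset, "position": [x, y, z]}
    scene.append(obj)
    return obj

# Tools the bridge exposes to the LLM, keyed by name.
TOOLS = {"place_object": place_object}

def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC-style tool call and return a JSON reply."""
    request = json.loads(raw)
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if request.get("method") != "tools/call" or tool is None:
        # -32601 is the JSON-RPC "method not found" error code.
        reply = {"jsonrpc": "2.0", "id": request.get("id"),
                 "error": {"code": -32601, "message": "unknown method or tool"}}
    else:
        # Simplified: a real server would validate arguments against a schema.
        result = tool(**params.get("arguments", {}))
        reply = {"jsonrpc": "2.0", "id": request.get("id"), "result": result}
    return json.dumps(reply)

# What the chat client (something like Cursor) might send on the LLM's behalf.
request = json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "place_object",
               "arguments": {"asset": "Wall", "x": 0, "y": 0, "z": 5}},
})
print(handle_request(request))
```

The point of the structure is that the LLM never touches the engine directly. It can only pick a tool name and fill in arguments, so the quality of the result depends heavily on which tools the server exposes and how well the model reasons about their arguments.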

I connected an open source MCP and asked Cursor to please set up a basic level with the assets inside the project. I wanted a basic ground floor with some walls around it to serve as the level’s boundaries. It was able to fetch the assets and put them into the scene, but they were placed completely haphazardly.

First stab at vibe-coding using a Unity MCP. Nailed it.
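For a sense of how small the requested layout is when computed directly, here is a minimal sketch that works out where four boundary walls should sit around a rectangular floor centered on the origin. It is engine-agnostic Python, not Unity code, and the `Box` type, the `boundary_walls` function, and the default wall height and thickness are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Box:
    name: str
    center: tuple  # (x, y, z), with y pointing up
    size: tuple    # (width along x, height along y, depth along z)

def boundary_walls(floor_w, floor_d, wall_h=2.0, wall_t=0.5):
    """Return four walls that enclose a floor_w x floor_d floor at the origin."""
    half_w, half_d = floor_w / 2, floor_d / 2
    y = wall_h / 2  # walls rest on the floor, so their center is at half height
    # North and south walls are extended by the wall thickness on each side
    # so that the corners are closed.
    long_x = floor_w + 2 * wall_t
    return [
        Box("north", (0.0, y, half_d + wall_t / 2), (long_x, wall_h, wall_t)),
        Box("south", (0.0, y, -(half_d + wall_t / 2)), (long_x, wall_h, wall_t)),
        Box("east", (half_w + wall_t / 2, y, 0.0), (wall_t, wall_h, floor_d)),
        Box("west", (-(half_w + wall_t / 2), y, 0.0), (wall_t, wall_h, floor_d)),
    ]

for wall in boundary_walls(20, 10):
    print(wall.name, wall.center, wall.size)
```

The arithmetic is trivial once the floor size is known. The hard part for the model is that it has to discover asset dimensions, pivots, and orientations through whatever tools the MCP server exposes, which is presumably where the haphazard placement comes from.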

I don’t think this had anything to do with the LLMs or the MCP itself, the latter of which is well designed and was created by an independent dev. It’s more that the models aren’t fine-tuned on these tasks specifically, and that Unity’s APIs aren’t built with AI in mind. This makes leveraging MCPs in Unity something of an uphill battle.

I felt I got the gist of working with MCPs in Unity with the one query and went on to trial Unity’s native AI integration.

Unity Muse

Last year, Unity released its very own AI-integrated product called Muse. The product costs an additional $30/month on top of the typical Unity plans, and does a number of different things, including game asset generation. But the Muse feature I was most interested in was Chat, which is something akin to having Cursor in Unity in that it can reference files in the project, look at its settings, and so on.

It’s possible that because building something with Cursor for the first time felt like actual magic, using Unity Muse for the first time felt a bit underwhelming.

As with the MCP, I asked Muse to go ahead and put my terrain in the scene and populate it with a few trees that I could use as my first level. Rather than actually doing it, Muse gave me step-by-step directions on how I would go about doing this myself. But it got even those directions wrong: rather than tell me to use my existing assets, it told me to create generic Unity 3D objects.

I was off to a rough start with Unity Muse, but eventually found it to be a useful-ish tool. It helped debug an issue as a result of my existing project settings, and helped me figure out that a window was missing because I hadn’t yet downloaded the relevant package. I could have used any LLM to go through some debugging steps to solve these issues (or thought about it myself for 2 seconds had LLMs not atrophied my brain), but it was nice to talk to one that could identify my problem directly because it had access to the project’s files and configs.

Unity’s Muse helped me put together a quick prototype. Just don’t expect Cursor level quality.

The product certainly still has a lot of issues. I ran into a random unidentified error, LLM outputs that kept getting truncated, and the occasional direction that was just plain wrong. Muse is still in its infancy, and I can see how it might be useful to those not very familiar with Unity or maybe to professional developers once the rough edges have been smoothed out. Having said that, over the roughly 4 hours of prototyping, I found myself going back more and more to Cursor when I needed to actually build out functionality. I feel that Muse should eventually outperform Cursor since it’s an AI product designed specifically for Unity, but it certainly doesn’t feel like it’s outperforming Cursor today.

Roblox Studio

Like Unity, Roblox has its own natively integrated AI system called Assistant. It comes with the product for free and doesn't require any extra download. So as far as I was concerned, it could only be a plus.

The first thing that I immediately noticed about the Roblox Assistant was that it was much more autonomous than Muse. I gave it instructions and it created models and functionality for how those models should interact all by itself.

Roblox Studio’s Assistant was able to cobble some assets together on its own, even if the result looks a bit silly.

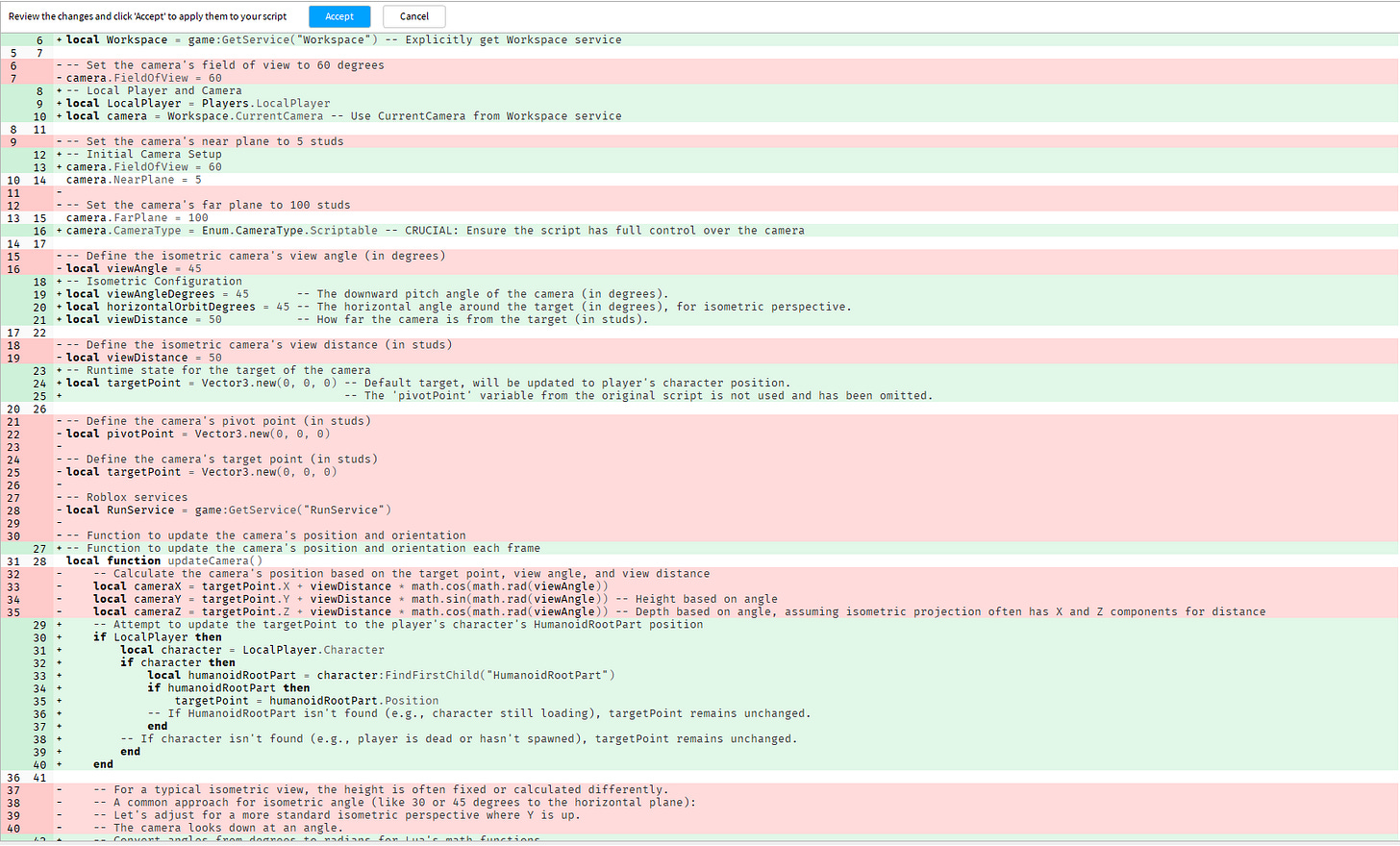

When I would give it subsequent prompts to improve the functionality it would make changes to the existing code and generate file diffs, which effectively just show what has been removed and what has been added to the file.

Number one vibe coding rule: always and unquestionably accept AI-generated file diffs

But things went off the rails pretty fast when I tried to get it to make an isometric camera view. Roblox requires that your camera be centered on your avatar, so to make an isometric, turn-based game you have to be a little creative. Still, it’s not something others haven’t done before. The solution in this case was to have the camera view my avatar from an isometric angle, with the avatar serving as the camera’s focal point. It took a bunch of prompting before the Assistant finally got this right. My final requirement was having the camera spawn in a different location than the default one, which meant moving my avatar spawn.
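The geometry behind that camera setup is simple enough to sketch. The snippet below is engine-agnostic Python rather than Roblox’s Luau, and the function name, the y-up axis convention, and the default distance, yaw, and pitch values are all assumptions chosen for illustration. It only computes where the camera should sit relative to the focal point. Aiming the camera back at the avatar would be left to the engine’s own look-at call.

```python
import math

def isometric_camera_position(focus, distance=40.0, yaw_deg=45.0, pitch_deg=30.0):
    """Place a camera `distance` units from `focus`, looking down at an angle.

    focus is an (x, y, z) point with y pointing up. yaw rotates the camera
    around the vertical axis, and pitch is its elevation above the ground plane.
    """
    fx, fy, fz = focus
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    # Split the distance into a ground-plane part and a vertical part.
    horizontal = distance * math.cos(pitch)
    return (
        fx + horizontal * math.sin(yaw),
        fy + distance * math.sin(pitch),
        fz + horizontal * math.cos(yaw),
    )

# Hypothetical avatar position used as the focal point.
avatar = (5.0, 0.0, 5.0)
print(isometric_camera_position(avatar))
```

Recomputing this offset every frame from the avatar’s current position keeps the avatar at the focal point while the viewing angle stays fixed, which is the effect described above.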

To do this, the Roblox Assistant told me to pull up the Palette window and drag out a new spawn point into the scene. I’m not familiar with Roblox Studio, so I had to do some looking around. When I couldn’t find it, I asked the Assistant where to look. It responded that I should pull it up with a hotkey that was muscle memory to me: the hotkey for opening up the palette in VS Code, arguably the world’s most popular code editor. Rather than intentionally trying to gaslight me into believing in something that didn’t exist, the Assistant had simply made the understandable mistake of thinking it was in a different piece of software.

There’s no ‘palette’ window in Roblox and that shortcut seemed awfully familiar…

Since I wasn’t familiar with Roblox Studio, and realizing that I wasn’t going to get very far with this hallucinating Assistant, I decided to pump the brakes on completing my prototype about an hour into developing it and go on to building it in Unreal.

Unreal

For whatever reason, Unreal doesn’t yet seem to have any sort of native AI support. Maybe Epic Games saw implementations elsewhere and decided that they weren’t going to create their own solution unless it worked really well. I’m not sure.

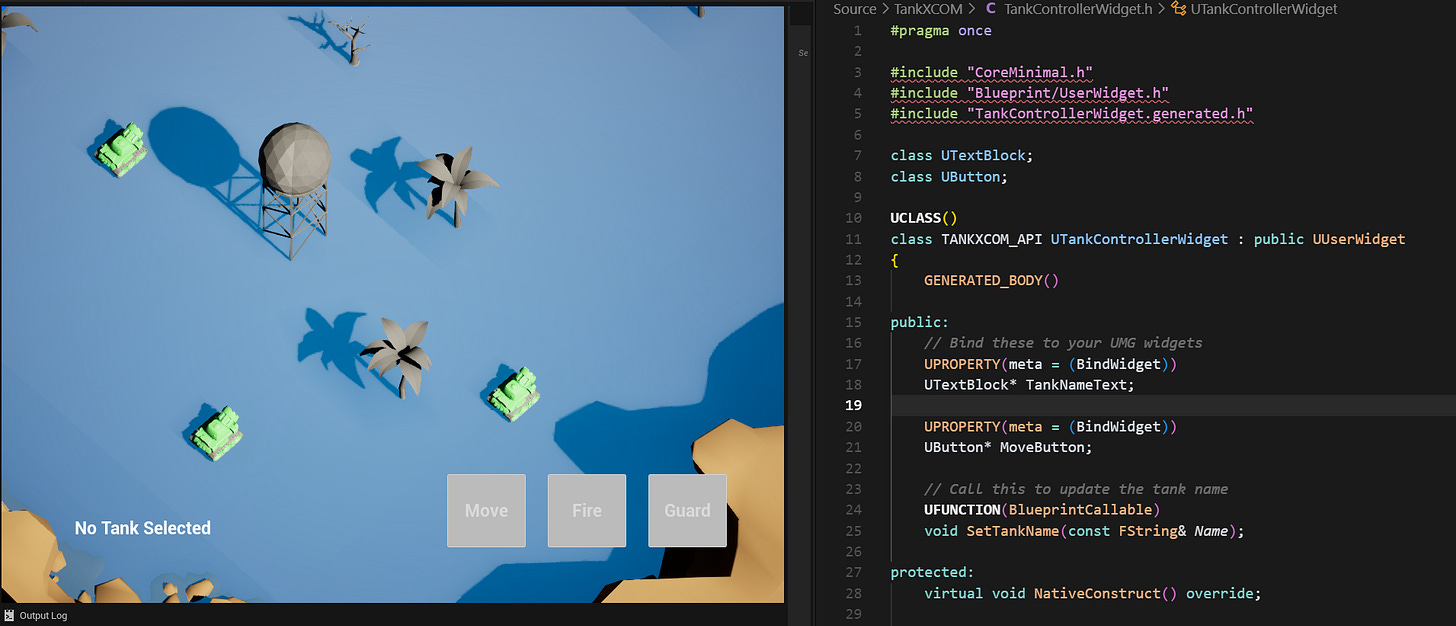

In any case, I booted up a new project and selected C++ as the project default when prompted to choose between the archaic programming language and Unreal’s notorious no-code solution called Blueprints. This choice used to feel like choosing between a root canal and a tax audit, but because LLMs are pretty good at coding and don’t really care for UIs, C++ felt like a significantly better choice this time around.

Because the Unreal engine doesn’t have a built-in AI assistant, I ended up using a third-party plugin, Ludus AI, which is just an OpenAI wrapper that functions as an Unreal plugin. The product doesn’t offer much in the way of integration. It doesn’t manipulate anything for you, or allow you to reference objects. It effectively just serves as a window in Unreal for OpenAI models. Worse yet, it ended up creating issues compiling my project that caused me to spend a fair bit of time debugging before figuring out the source. At this time, I wouldn’t recommend it.

As a result, and as in my other experiments, I went back to using Cursor. Even though I was creating most of the functionality using C++ rather than Blueprints, using Cursor to make the prototype was a bit onerous for a couple of reasons. First, Cursor can’t easily create files for you because the Unreal editor’s build system will only recognize files made inside the editor. This means I had to open the editor, right click, create a C++ file, and save it before returning to Cursor. Sigh. Second, I had to compile my project using Visual Studio, a different editor altogether. There are workarounds for both of these issues but they’re not at all obvious.

Cursor made developing with C++ in Unreal a lot more pleasant

Despite these road bumps, Cursor did significantly simplify building with C++ in Unreal. While Blueprints remain suitable for things like UI, I found myself less tempted to create extensive functionality or logic with them, which I would usually come to regret. Instead, I’d just use Cursor for what it does best by describing exactly the type of functionality I needed in each file. Where relevant, I would then hook them into the Blueprints or game objects that can be interacted with in the scene view.

Final Thoughts

Given my underwhelming experience testing out AI-assisted development, I’m not surprised that it hasn’t gotten as much adoption with game developers as it has with other software developers. If you want to create a draft of a script or something else simple, it works great. But the tools currently just aren’t mature enough to do much of the work required for game prototyping.

Of the three game engines I tried, Unity was probably the best experience, but I still think it has quite a way to go before being super useful to professional game developers.

In the meantime, if you want to make fast game prototypes using AI, you’re probably better off using a JavaScript framework like three.js and an editor like Cursor or Windsurf. Provided that your prototype doesn’t have crazy requirements, you can quickly make it there, and once you feel satisfied with the results, actually build it using something like Unity, Roblox Studio, or Unreal.

As it stands, I wouldn’t use one of those engines if you’re thinking you can bang out a quick game prototype using AI. This seems like a pretty massive opportunity, and one that I bet we’ll see tackled over the next couple of years, either by one of the above incumbents or by a startup. I guess we’ll see.